Whether in AI or any other discipline, learning and research mean constantly revisiting the field's history, taking stock of its current state, and identifying its most important concepts, which helps people see the thread that runs through it all. Software engineer James Le recently drew on his own research experience to summarize ten deep learning methods that AI researchers should know, and his summary is very instructive.

The 10 Deep Learning Methods AI Practitioners Need to Apply

Interest in machine learning has exploded over the past decade. Machine learning now shows up in computer science projects, industry conferences, and media coverage. Yet in many discussions about machine learning, people confuse what AI can actually do with what they wish it could do.

Fundamentally, machine learning uses algorithms to extract information from raw data and represent it in some type of model; we then use that model to make inferences about other data that we have not yet modeled.
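
To make the "learn a model from data, then infer on new data" idea concrete, here is a minimal Python sketch. It assumes the simplest possible model, a straight line y = w*x + b fitted by ordinary least squares; the function names `fit_linear` and `predict` and the toy data are hypothetical illustrations, not anything from the original article.

```python
# Minimal sketch: extract a pattern from raw data into a model, then use the
# model on an input it has never seen.
# Assumption: a one-variable linear model y = w*x + b fitted by least squares.

def fit_linear(xs, ys):
    """Return (w, b) minimizing the squared error of y = w*x + b on the data."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    w = cov / var
    b = mean_y - w * mean_x
    return w, b

def predict(model, x):
    """Apply the fitted model to a new input."""
    w, b = model
    return w * x + b

# Hypothetical "raw data": noise-free observations of y = 2x + 1.
train_x = [0.0, 1.0, 2.0, 3.0, 4.0]
train_y = [1.0, 3.0, 5.0, 7.0, 9.0]

model = fit_linear(train_x, train_y)  # learning phase
print(model)                          # (2.0, 1.0)
print(predict(model, 10.0))           # 21.0, for an x not in the training data
```

The same two-phase pattern (fit on the data you have, then predict on data the model has not seen) carries over to neural networks; only the model and the fitting procedure become far more complex.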

Neural networks, one type of machine learning model, have existed for at least 50 years. The basic unit of a neural network is a node, which loosely mimics a biological neuron in the mammalian brain; the connections between nodes (also modeled on the biological brain) are adjusted over time, a process called training.
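
As an illustration of a single node whose connections change during training, the sketch below implements a classic perceptron. This is a textbook example chosen for this sketch, not a method described in the article; the step activation, learning rate of 0.1, 20 epochs, and the logical-AND training data are all assumptions, and `neuron` and `train_perceptron` are hypothetical names.

```python
# Minimal sketch of one artificial neuron trained with the perceptron rule.
# Assumptions: step activation, learning rate 0.1, 20 epochs, logical AND as data.

def neuron(weights, bias, inputs):
    """Fire (1) if the weighted sum of the inputs plus the bias is positive, else 0."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0

def train_perceptron(samples, epochs=20, lr=0.1):
    """Learn connection weights and a bias from (inputs, target) pairs."""
    n_inputs = len(samples[0][0])
    weights = [0.0] * n_inputs
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - neuron(weights, bias, inputs)
            # Nudge each connection in proportion to its input and the error;
            # a correct prediction (error 0) leaves the weights unchanged.
            weights = [w + lr * error * x for w, x in zip(weights, inputs)]
            bias += lr * error
    return weights, bias

and_data = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(and_data)
print([neuron(weights, bias, x) for x, _ in and_data])  # [0, 0, 0, 1]
```

A single perceptron can only separate classes with a straight line; stacking many such nodes into layers is what gives deep networks their expressive power.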

In the mid-1980s and early 1990s, many important neural network architectures were developed, but getting good results from them required enormous computing power and large datasets, neither of which was available at the time. The results were disappointing, and enthusiasm for machine learning gradually cooled. In the early 21st century, computing power grew exponentially, and the industry witnessed a "Cambrian explosion" of computing technology that had been almost unimaginable before. Deep learning, an important architecture in this field, won many major machine learning competitions during this decade of explosive growth in computing power. Interest in it still had not cooled as of this year; today, deep learning appears in every corner of machine learning.

Recently, I also started reading academic papers on deep learning. Here are a few of the papers I have collected that had a major impact on the development of deep learning:

1. Gradient-Based Learning Applied to Document Recognition (1998)

Significance: Introducing Convolutional Neural Networks to the Machine Learning World

Authors: Yann LeCun, Leon Bottou, Yoshua Bengio, and Patrick Haffner

2. Deep Boltzmann Machines (2009)

Significance: A new learning algorithm for Boltzmann machines that contain many layers of hidden variables.

Authors: Ruslan Salakhutdinov, Geoffrey Hinton

3. Building High-Level Features Using Large-Scale Unsupervised Learning (2012)

Significance: Addressed the problem of building high-level, class-specific feature detectors from unlabeled data only.

Authors: Quoc V. Le, Marc'Aurelio Ranzato, Rajat Monga, Matthieu Devin, Kai Chen, Greg S. Corrado, Jeff Dean, Andrew Y. Ng

4. DeCAF: A Deep Convolutional Activation Feature for Generic Visual Recognition (2013)

Significance: The release of DeCAF, an open-source implementation of deep convolutional activation features, together with all relevant network parameters, enables vision researchers to run experiments across a range of visual concept learning paradigms.

Authors: Jeff Donahue, Yangqing Jia, Oriol Vinyals, Judy Hoffman, Ning Zhang, Eric Tzeng, Trevor Darrell

5. Playing Atari with Deep Reinforcement Learning (2013)

Significance: Presented the first deep learning model to successfully learn control policies directly from high-dimensional sensory input using reinforcement learning.

Authors: Volodymyr Mnih, Koray Kavukcuoglu, David Silver, Alex Graves, Ioannis Antonoglou, Daan Wierstra, Martin Riedmiller (DeepMind Team)

While studying these papers, I came across many interesting ideas. Here I will share ten deep learning methods that AI engineers can apply to their machine learning problems.

But first, let's define what "deep learning" is. For many people, defining "deep learning" is genuinely challenging, because its meaning has changed considerably over the past decade.

Let's first get a visual sense of where deep learning stands. The following figure shows how the three concepts of AI, machine learning, and deep learning relate to one another.

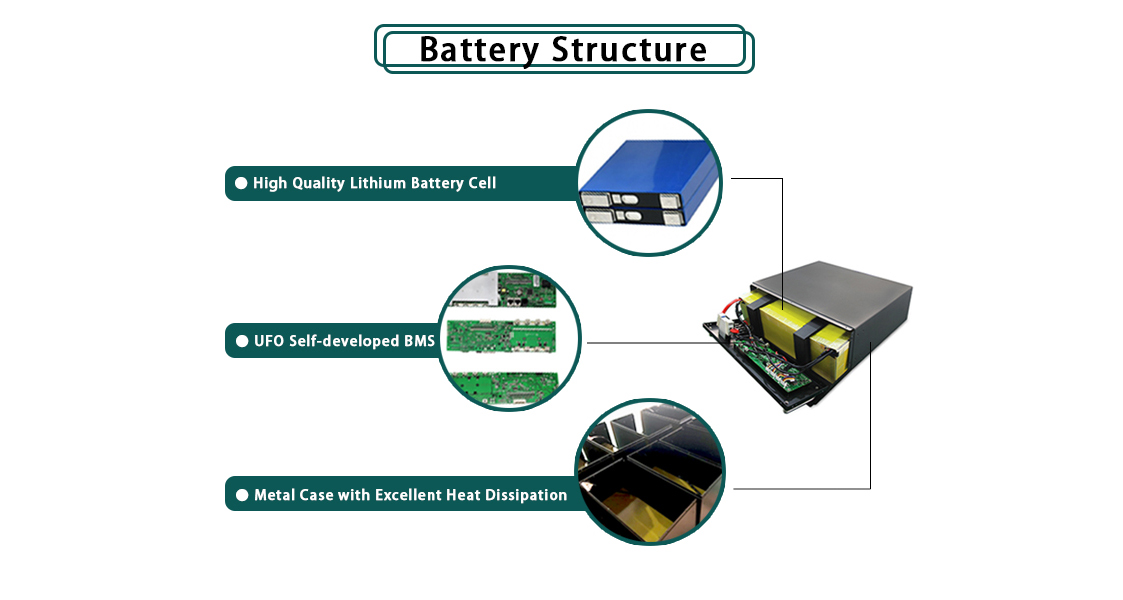
